
文章目录

- 一、 Himmelblau 优化

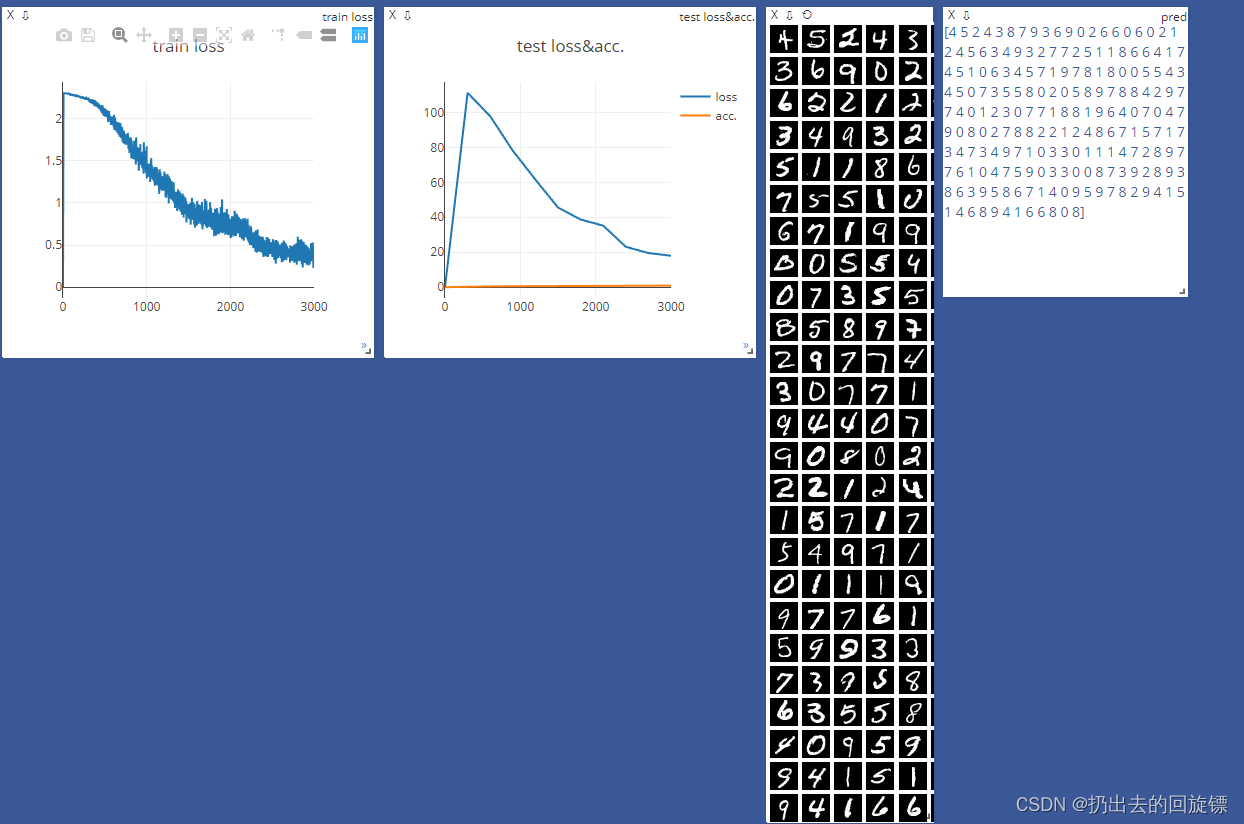

- 二、多分类实战-Mnist

- 三、Sequential与CPU加速-Mnist

- 四、visidom可视化

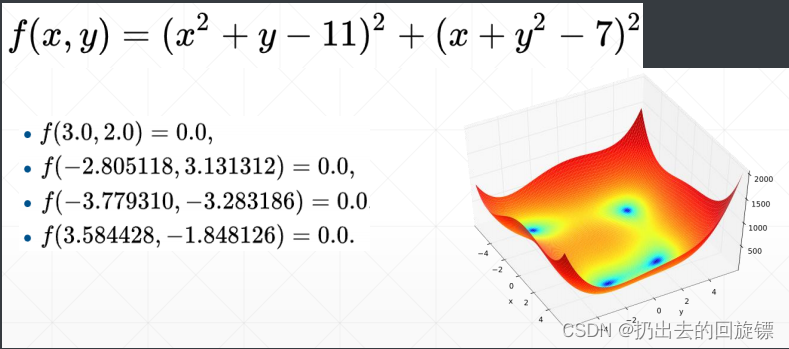

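I. Himmelblau optimization

The Himmelblau function is a two-dimensional objective function with four minima. The function and its minimum points are shown below: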

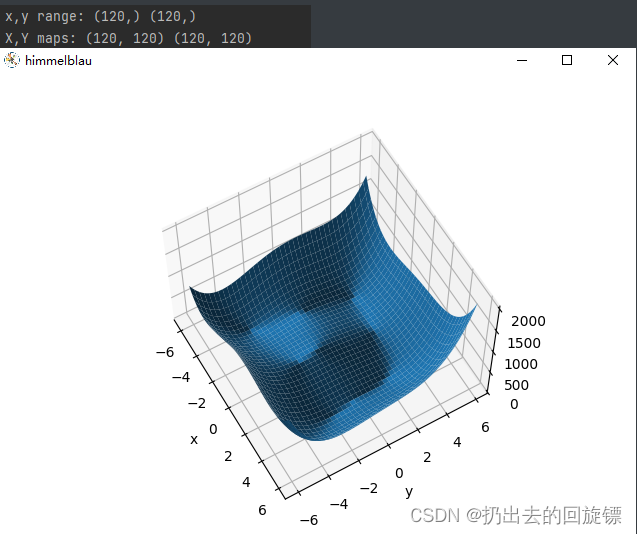
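As a quick numerical check, the sketch below evaluates f(x, y) = (x² + y − 11)² + (x + y² − 7)² at the four minimum points. The coordinates are the commonly tabulated values rounded to six decimals; they are typed in here as an assumption rather than read from the figure, so the printed values are only approximately zero.

```python
import numpy as np


def himmelblau(x, y):
    """Himmelblau function, same expression as used in this article's code."""
    return (x ** 2 + y - 11) ** 2 + (x + y ** 2 - 7) ** 2


# The four minima as commonly tabulated (rounded to six decimals).
MINIMA = np.array([
    [3.0, 2.0],
    [-2.805118, 3.131312],
    [-3.779310, -3.283186],
    [3.584428, -1.848126],
])

# Evaluate the function at every tabulated minimum in one vectorized call.
values = himmelblau(MINIMA[:, 0], MINIMA[:, 1])
for (px, py), v in zip(MINIMA, values):
    print(f"f({px:.6f}, {py:.6f}) = {v:.2e}")
```

Only the point (3, 2) gives exactly zero; the other three are rounded, so their values are tiny but nonzero.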

Plotting the surface:

    import numpy as np
    from matplotlib import pyplot as plt


    def himmelblau(x):
        # x[0] and x[1] are the two coordinates (scalars, arrays, or tensor entries)
        return (x[0] ** 2 + x[1] - 11) ** 2 + (x[0] + x[1] ** 2 - 7) ** 2


    x = np.arange(-6, 6, 0.1)
    y = np.arange(-6, 6, 0.1)
    print('x,y range:', x.shape, y.shape)

    # Build the 2-D grid and evaluate the function at every grid point
    X, Y = np.meshgrid(x, y)
    print('X,Y maps:', X.shape, Y.shape)
    Z = himmelblau([X, Y])

    fig = plt.figure('himmelblau')
    ax = fig.add_subplot(projection='3d')
    ax.plot_surface(X, Y, Z)
    ax.view_init(60, -30)
    ax.set_xlabel('x')
    ax.set_ylabel('y')
    plt.show()

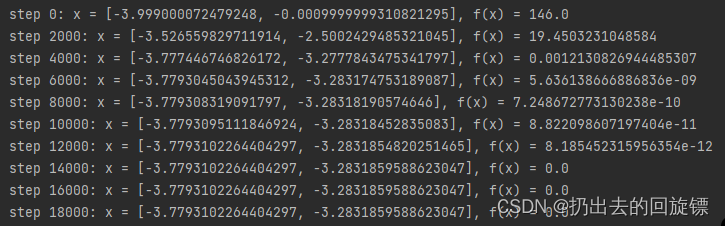

Gradient descent (using the Adam optimizer):

    import torch

    # Candidate starting points: [1., 0.], [-4., 0.], [4., 0.]
    x = torch.tensor([-4., 0.], requires_grad=True)
    optimizer = torch.optim.Adam([x], lr=1e-3)

    for step in range(20000):
        pred = himmelblau(x)

        # Clear the gradients stored on the parameters
        optimizer.zero_grad()
        pred.backward()
        # The optimizer updates the parameters (for plain SGD: x' = x - lr * gradient)
        optimizer.step()

        if step % 2000 == 0:
            print('step {}: x = {}, f(x) = {}'.format(step, x.tolist(), pred.item()))

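Starting x from different initial positions leads to different converged results and a different number of steps to get there. The choice of initial position therefore matters a great deal for both the convergence process and the final result.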
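The sketch below illustrates that dependence by running the same optimizer from the three starting points listed in the comment above. The helper `descend` is hypothetical, and its learning rate and step count (`lr=1e-2`, 3000 steps) are assumptions chosen so that it finishes quickly; they are not the settings used in the article (`lr=1e-3`, 20000 steps).

```python
import torch


def himmelblau(x):
    return (x[0] ** 2 + x[1] - 11) ** 2 + (x[0] + x[1] ** 2 - 7) ** 2


def descend(start, steps=3000, lr=1e-2):
    """Run Adam from `start` (hypothetical helper).

    Returns the final point, the final function value, and the first step
    at which f(x) dropped below 1e-3 (None if that never happened).
    """
    x = torch.tensor(start, dtype=torch.float32, requires_grad=True)
    optimizer = torch.optim.Adam([x], lr=lr)
    first_close = None
    for step in range(steps):
        loss = himmelblau(x)
        if first_close is None and loss.item() < 1e-3:
            first_close = step
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    return x.detach().tolist(), himmelblau(x).item(), first_close


# Same optimizer and settings, three different starting points.
results = {}
for start in ([1.0, 0.0], [-4.0, 0.0], [4.0, 0.0]):
    results[tuple(start)] = descend(start)
    point, value, first_close = results[tuple(start)]
    print(f"start={start} -> x={point}, f(x)={value:.6f}, "
          f"first f<1e-3 at step {first_close}")
```

Comparing the printed end points and step counts across the three runs shows how much the outcome depends on where the search starts.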

II. Multi-class classification in practice: MNIST

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim
    from torchvision import datasets, transforms

    batch_size = 200
    learning_rate = 0.01
    epochs = 10

    train_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=True, download=True,
                       transform=transforms.Compose([
                           transforms.ToTensor(),
                           transforms.Normalize((0.1307,), (0.3081,))
                       ])),
        batch_size=batch_size, shuffle=True)
    test_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=False,
                       transform=transforms.Compose([
                           transforms.ToTensor(),
                           transforms.Normalize((0.1307,), (0.3081,))
                       ])),
        batch_size=batch_size, shuffle=True)

    # Network architecture: 784 -> 200 -> 200 -> 10
    w1, b1 = torch.randn(200, 784, requires_grad=True), torch.zeros(200, requires_grad=True)
    w2, b2 = torch.randn(200, 200, requires_grad=True), torch.zeros(200, requires_grad=True)
    w3, b3 = torch.randn(10, 200, requires_grad=True), torch.zeros(10, requires_grad=True)

    # Kaiming initialization (overwrites the randn values in place)
    torch.nn.init.kaiming_normal_(w1)
    torch.nn.init.kaiming_normal_(w2)
    torch.nn.init.kaiming_normal_(w3)


    def forward(x):
        x = x @ w1.t() + b1
        x = F.relu(x)
        x = x @ w2.t() + b2
        x = F.relu(x)
        x = x @ w3.t() + b3
        # ReLU on the output layer is kept from the original;
        # CrossEntropyLoss also accepts raw, unactivated logits
        x = F.relu(x)
        return x


    optimizer = optim.SGD([w1, b1, w2, b2, w3, b3], lr=learning_rate)

Cross-entropy loss is equivalent to applying three operations in sequence: softmax, log, and nll_loss.

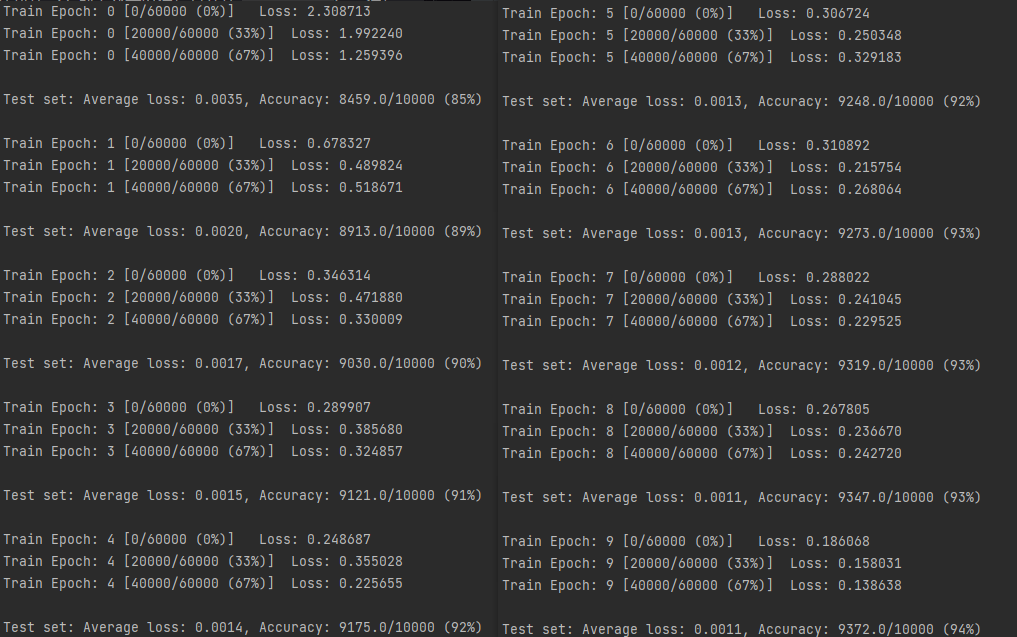
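The equivalence can be checked numerically. The sketch below uses made-up logits and labels (assumptions for illustration only) and compares `F.cross_entropy` with the explicit softmax, log, and `nll_loss` chain, plus the `log_softmax` variant, which is numerically safer.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)            # 4 samples, 10 classes (made-up data)
target = torch.tensor([1, 0, 9, 3])    # made-up class labels

# One call: cross_entropy takes raw logits.
loss_ce = F.cross_entropy(logits, target)

# Three explicit steps: softmax -> log -> negative log-likelihood.
probs = F.softmax(logits, dim=1)
loss_manual = F.nll_loss(torch.log(probs), target)

# Numerically safer variant: log_softmax -> nll_loss.
loss_stable = F.nll_loss(F.log_softmax(logits, dim=1), target)

print(loss_ce.item(), loss_manual.item(), loss_stable.item())
```

Because the softmax is already built into the loss, the network output should not be passed through a separate softmax before it reaches `nn.CrossEntropyLoss`.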

    criteon = nn.CrossEntropyLoss()

    for epoch in range(epochs):

        for batch_idx, (data, target) in enumerate(train_loader):
            # Flatten each 28x28 image into a 784-dimensional vector
            data = data.view(-1, 28 * 28)

            logits = forward(data)
            loss = criteon(logits, target)

            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            if batch_idx % 100 == 0:
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, batch_idx * len(data), len(train_loader.dataset),
                    100. * batch_idx / len(train_loader), loss.item()))

        # Evaluate on the test set after every epoch
        test_loss = 0
        correct = 0
        with torch.no_grad():
            for data, target in test_loader:
                data = data.view(-1, 28 * 28)
                logits = forward(data)
                test_loss += criteon(logits, target).item()

                pred = logits.argmax(dim=1)
                correct += pred.eq(target).sum().item()

        # criteon returns a per-batch mean, so average over the number of batches
        test_loss /= len(test_loader)
        print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
            test_loss, correct, len(test_loader.dataset),
            100. * correct / len(test_loader.dataset)))

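Notes:

- A batch size that is too small makes convergence slow; one that is too large makes training prone to settling in sharp minima, which generalize poorly.

- Pay attention to initialization: it is a critical step.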
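To see why initialization matters, the sketch below compares a first layer initialized with plain `torch.randn` against one initialized with `torch.nn.init.kaiming_normal_`, using the same 784-to-200 shape as `w1`. The input batch is random stand-in data, not MNIST, so the numbers only indicate the scale of the effect.

```python
import math
import torch

torch.manual_seed(0)
x = torch.randn(200, 784)                 # stand-in for a batch of flattened images

w_plain = torch.randn(200, 784)           # unit-variance weights, before the Kaiming step
w_kaiming = torch.empty(200, 784)
torch.nn.init.kaiming_normal_(w_kaiming)  # std = sqrt(2 / fan_in) with default arguments

# First-layer activations under each initialization.
out_plain = torch.relu(x @ w_plain.t())
out_kaiming = torch.relu(x @ w_kaiming.t())

print("expected Kaiming std:", math.sqrt(2 / 784))
print("weight std  plain:", w_plain.std().item(), " kaiming:", w_kaiming.std().item())
print("output std  plain:", out_plain.std().item(), " kaiming:", out_kaiming.std().item())
```

With unit-variance weights the activations grow by a large factor at every layer, whereas the Kaiming-scaled weights keep them at roughly the scale of the input.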

III. Sequential and GPU acceleration: MNIST

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torchvision import datasets, transforms

    batch_size = 200
    learning_rate = 0.01
    epochs = 10

    train_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=True, download=True,
                       transform=transforms.Compose([
                           transforms.ToTensor(),
                           transforms.Normalize((0.1307,), (0.3081,))
                       ])),
        batch_size=batch_size, shuffle=True)
    test_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=False,
                       transform=transforms.Compose([
                           transforms.ToTensor(),
                           transforms.Normalize((0.1307,), (0.3081,))
                       ])),
        batch_size=batch_size, shuffle=True)


    class MLP(nn.Module):

        def __init__(self):
            super(MLP, self).__init__()

            self.model = nn.Sequential(
                nn.Linear(784, 200),
                nn.LeakyReLU(inplace=True),
                nn.Linear(200, 200),
                nn.LeakyReLU(inplace=True),
                nn.Linear(200, 10),
                nn.LeakyReLU(inplace=True),
            )

        def forward(self, x):
            x = self.model(x)
            return x


    # Requires a CUDA-capable GPU
    device = torch.device('cuda:0')
    net = MLP().to(device)
    optimizer = optim.SGD(net.parameters(), lr=learning_rate)
    criteon = nn.CrossEntropyLoss().to(device)

    for epoch in range(epochs):

        for batch_idx, (data, target) in enumerate(train_loader):
            data = data.view(-1, 28 * 28)
            # Move the batch to the same device as the model
            data, target = data.to(device), target.to(device)

            logits = net(data)
            loss = criteon(logits, target)

            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            if batch_idx % 100 == 0:
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, batch_idx * len(data), len(train_loader.dataset),
                    100. * batch_idx / len(train_loader), loss.item()))

        # Evaluate on the test set after every epoch
        test_loss = 0
        correct = 0
        with torch.no_grad():
            for data, target in test_loader:
                data = data.view(-1, 28 * 28)
                data, target = data.to(device), target.to(device)
                logits = net(data)
                test_loss += criteon(logits, target).item()

                pred = logits.argmax(dim=1)
                correct += pred.eq(target).sum().item()

        # criteon returns a per-batch mean, so average over the number of batches
        test_loss /= len(test_loader)
        print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
            test_loss, correct, len(test_loader.dataset),
            100. * correct / len(test_loader.dataset)))

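Notes:

- In the MLP class, the constructor first calls the parent class's initializer, so the attributes inherited from nn.Module are initialized by the parent's own initialization method.

- Sequential is essentially a container module to which components can be added; the input passes through the resulting pipeline in order and comes out as the output.

- On a single-GPU machine, torch.device('cuda') and torch.device('cuda:0') refer to the same device.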
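The last two notes can be tried out in isolation. The sketch below builds the same layer stack with `nn.Sequential`, pushes a made-up batch through it, and confirms that calling the layers one after another gives the same result. The CPU fallback via `torch.cuda.is_available()` is an addition for machines without a GPU; it is not part of the article's code.

```python
import torch
import torch.nn as nn

# Fall back to the CPU when no CUDA device is visible (assumption: added for portability).
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Same layer stack as the MLP above.
model = nn.Sequential(
    nn.Linear(784, 200),
    nn.LeakyReLU(inplace=True),
    nn.Linear(200, 200),
    nn.LeakyReLU(inplace=True),
    nn.Linear(200, 10),
    nn.LeakyReLU(inplace=True),
).to(device)

batch = torch.randn(4, 784, device=device)   # made-up input batch

with torch.no_grad():
    out = model(batch)

    # Feeding the batch through the layers one by one gives the same result.
    step = batch
    for layer in model:
        step = layer(step)

print(out.shape, torch.allclose(out, step))
```

Creating the input directly on `device` avoids a separate `.to(device)` call; for data coming from a `DataLoader`, the explicit move shown in the training loop is still needed.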

IV. Visdom visualization

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torchvision import datasets, transforms
    from visdom import Visdom

    batch_size = 200
    learning_rate = 0.01
    epochs = 10

    train_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=True, download=True,
                       transform=transforms.Compose([
                           transforms.ToTensor(),
                           # transforms.Normalize((0.1307,), (0.3081,))
                       ])),
        batch_size=batch_size, shuffle=True)
    test_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=False,
                       transform=transforms.Compose([
                           transforms.ToTensor(),
                           # transforms.Normalize((0.1307,), (0.3081,))
                       ])),
        batch_size=batch_size, shuffle=True)


    class MLP(nn.Module):

        def __init__(self):
            super(MLP, self).__init__()

            self.model = nn.Sequential(
                nn.Linear(784, 200),
                nn.LeakyReLU(inplace=True),
                nn.Linear(200, 200),
                nn.LeakyReLU(inplace=True),
                nn.Linear(200, 10),
                nn.LeakyReLU(inplace=True),
            )

        def forward(self, x):
            x = self.model(x)
            return x


    # Requires a CUDA-capable GPU
    device = torch.device('cuda:0')
    net = MLP().to(device)
    optimizer = optim.SGD(net.parameters(), lr=learning_rate)
    criteon = nn.CrossEntropyLoss().to(device)

    # Requires a running Visdom server (python -m visdom.server)
    viz = Visdom()

    # Create the two plot windows with an initial point
    viz.line([0.], [0.], win='train_loss', opts=dict(title='train loss'))
    viz.line([[0.0, 0.0]], [0.], win='test',
             opts=dict(title='test loss&acc.', legend=['loss', 'acc.']))
    global_step = 0

    for epoch in range(epochs):

        for batch_idx, (data, target) in enumerate(train_loader):
            data = data.view(-1, 28 * 28)
            data, target = data.to(device), target.to(device)

            logits = net(data)
            loss = criteon(logits, target)

            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            # Append the current training loss to the curve
            global_step += 1
            viz.line([loss.item()], [global_step], win='train_loss', update='append')

            if batch_idx % 100 == 0:
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, batch_idx * len(data), len(train_loader.dataset),
                    100. * batch_idx / len(train_loader), loss.item()))

        # Evaluate on the test set after every epoch
        test_loss = 0
        correct = 0
        with torch.no_grad():
            for data, target in test_loader:
                data = data.view(-1, 28 * 28)
                data, target = data.to(device), target.to(device)
                logits = net(data)
                test_loss += criteon(logits, target).item()

                pred = logits.argmax(dim=1)
                correct += pred.eq(target).sum().item()

        # criteon returns a per-batch mean, so average over the number of batches
        test_loss /= len(test_loader)

        # Plot test loss and accuracy, then show the last test batch and its predictions
        viz.line([[test_loss, correct / len(test_loader.dataset)]],
                 [global_step], win='test', update='append')
        viz.images(data.view(-1, 1, 28, 28).cpu(), win='x')
        viz.text(str(pred.detach().cpu().numpy()), win='pred',
                 opts=dict(title='pred'))

        print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
            test_loss, correct, len(test_loader.dataset),
            100. * correct / len(test_loader.dataset)))